As part of their coursework in Microwave Communication Systems, third-year students of the Department of Telecommunications visited BHTelecom.

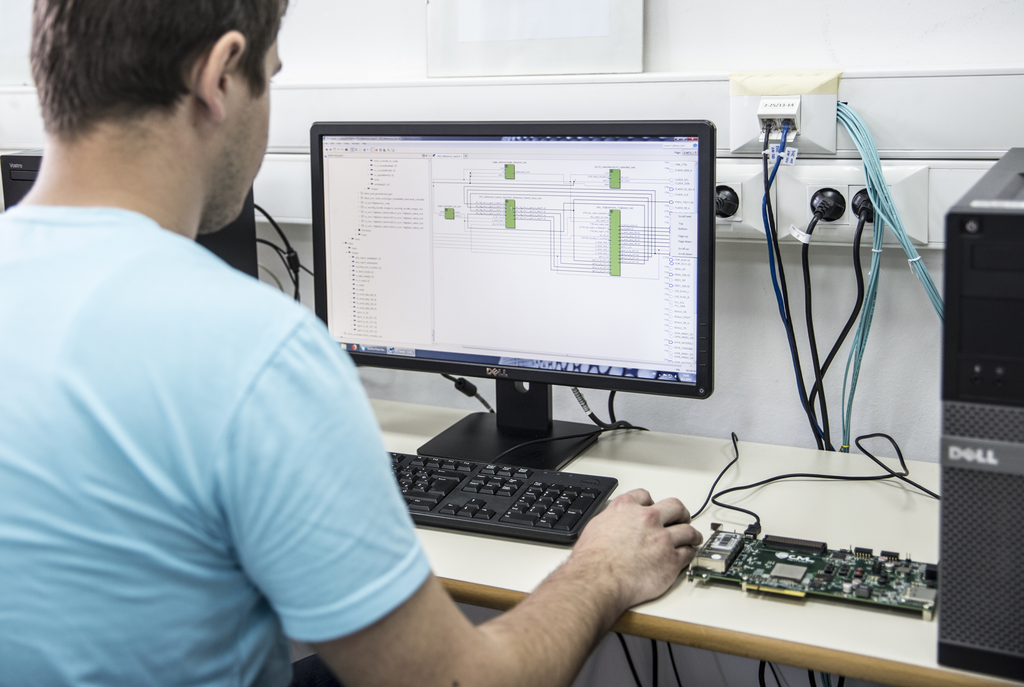

On 6 June 2024, third-year students of the Department of Telecommunications visited the company MIBO in Sarajevo. There they learned about the practical side of working in the telecommunications industry and about the company's technical projects.

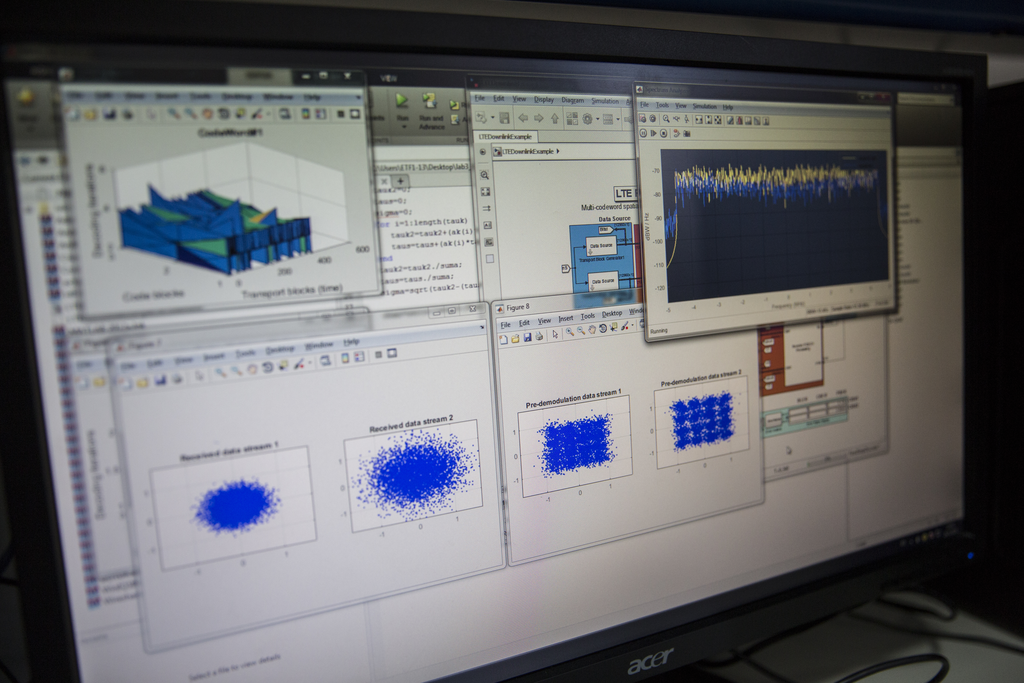

A. Maric, P. Njemcevic: Performance analysis of M‑ary RQAM and M‑ary DPSK receivers over the channels with TWDP fading

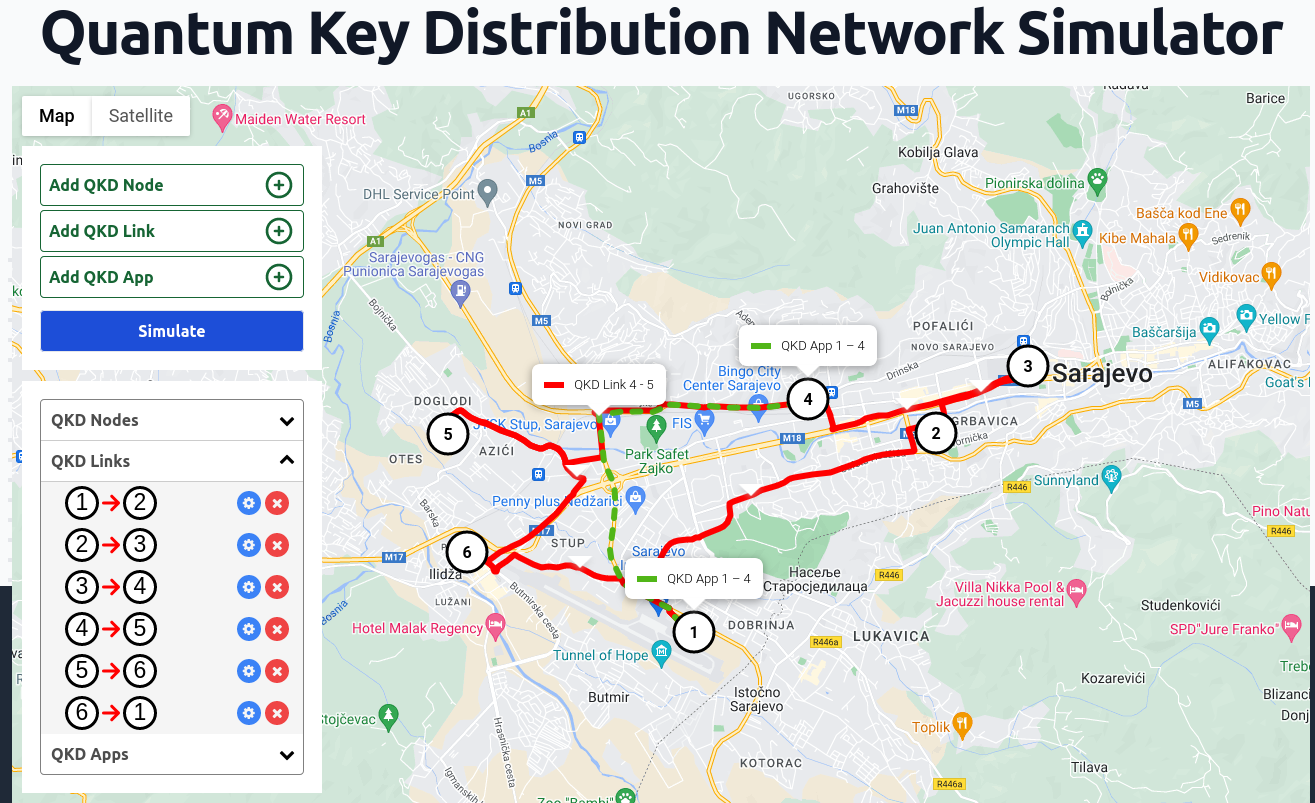

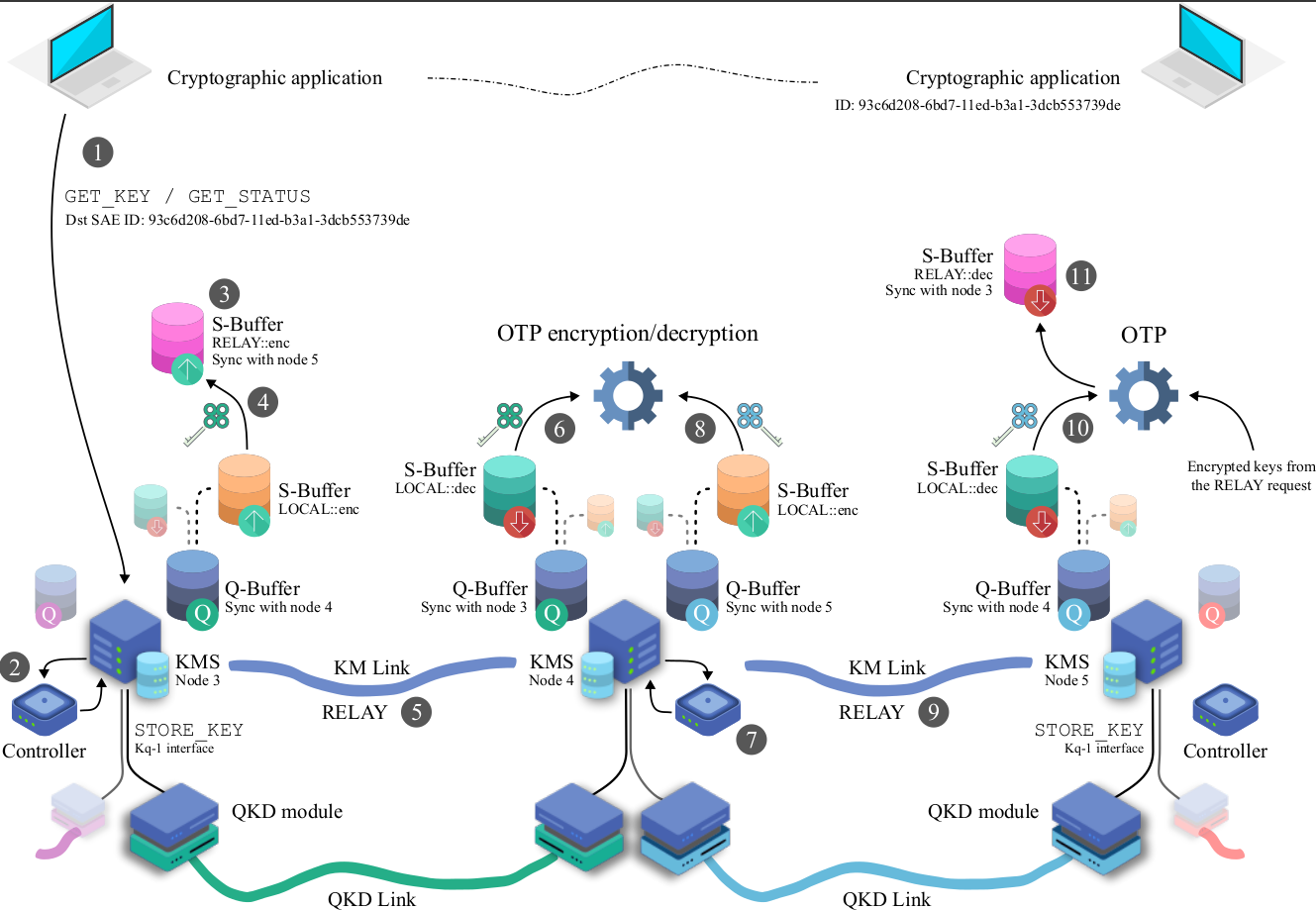

Paper published in the Journal of Optical Communications and Networking

On 28 December, Zijad Alić, dipl.ing.el., will hold a workshop titled "Managing Modern Applications in a Kubernetes Environment Using Current Cloud Platforms". During the workshop, students will have the opportunity to learn about advanced approaches to cloud systems. All students and others interested […]

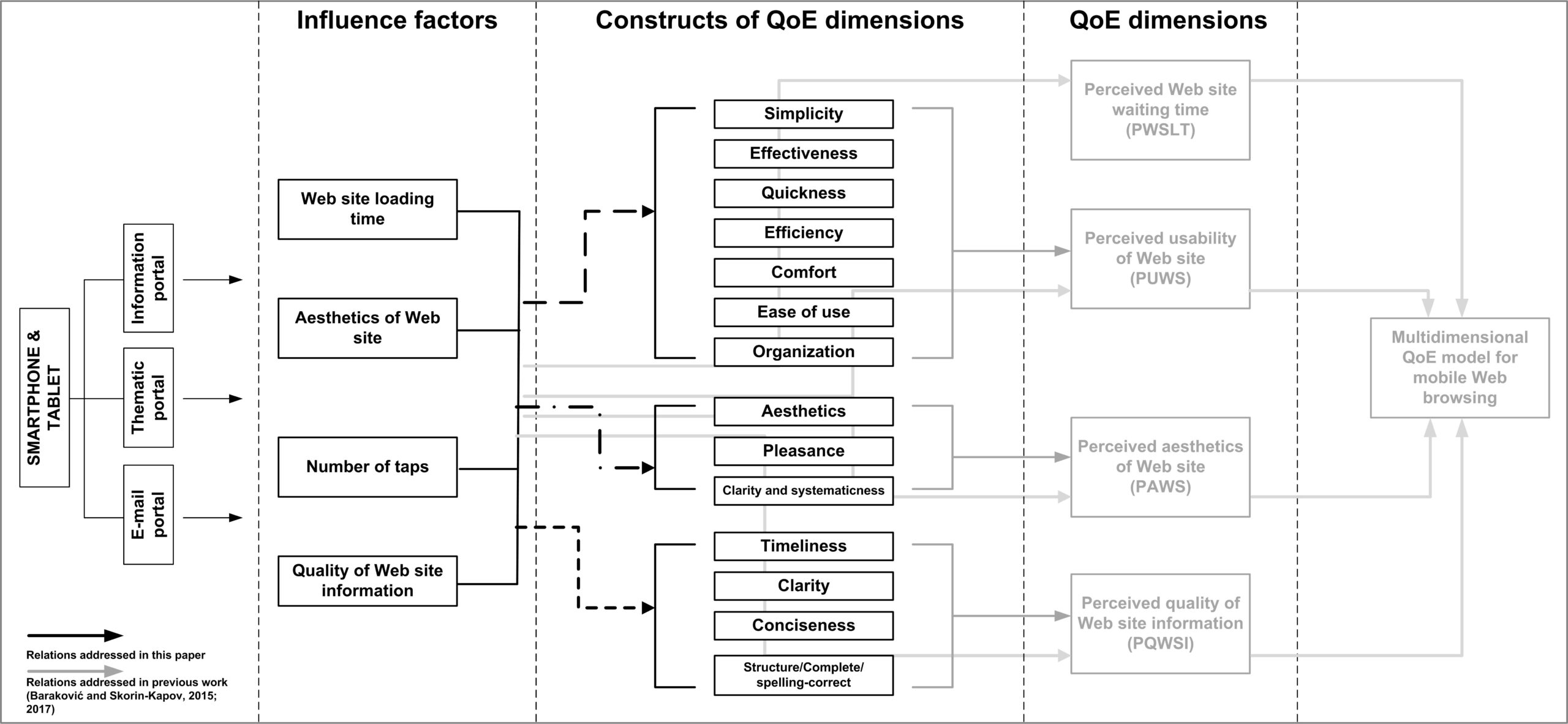

The article "The Impact of QoE Factors on the Perception of Constructs Comprising Information Quality, Usability, and Aesthetics in Mobile Web Browsing" has been published in the Q1 journal International Journal of Human–Computer Interaction.

New SPIE workshop - Recent Trends in Silicon Photonics, Fiber Optics, and High Power Laser Technologies

News

IEEE News

- Try IEEE’s New Virtual Testbed for 5G and 6G Tech

Telecom engineers and researchers face several challenges when it comes to testing their 5G and 6G […]

- Video Friday: Robot Baby With a Jet Pack

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE […]

- The Engineer Who Pins Down the Particles at the LHC

The Large Hadron Collider has transformed our understanding of physics since it began operating […]

About Us

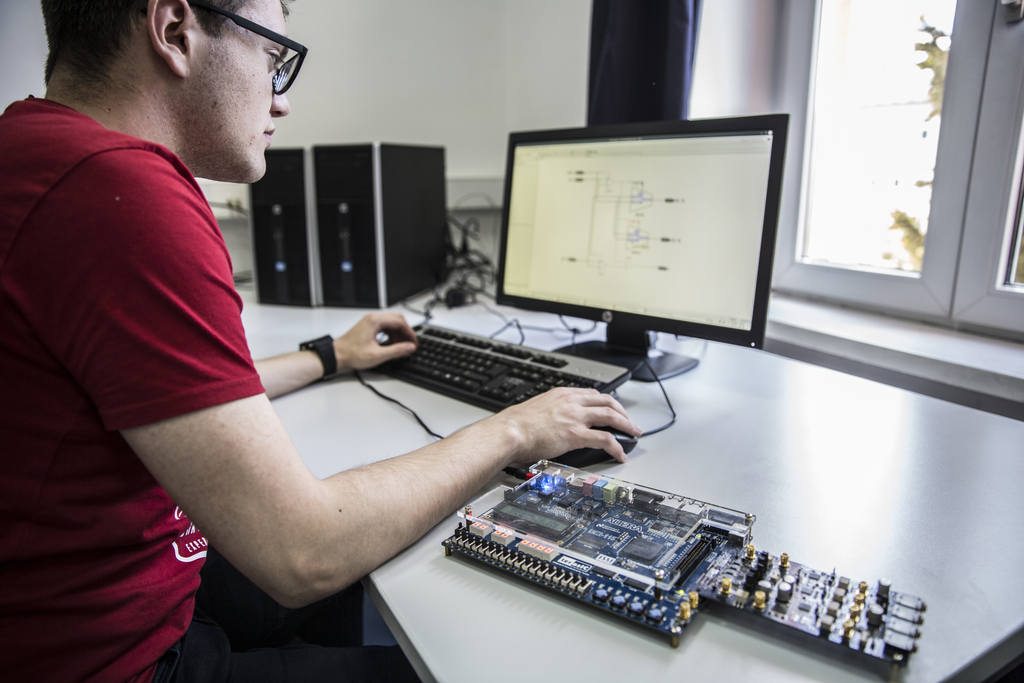

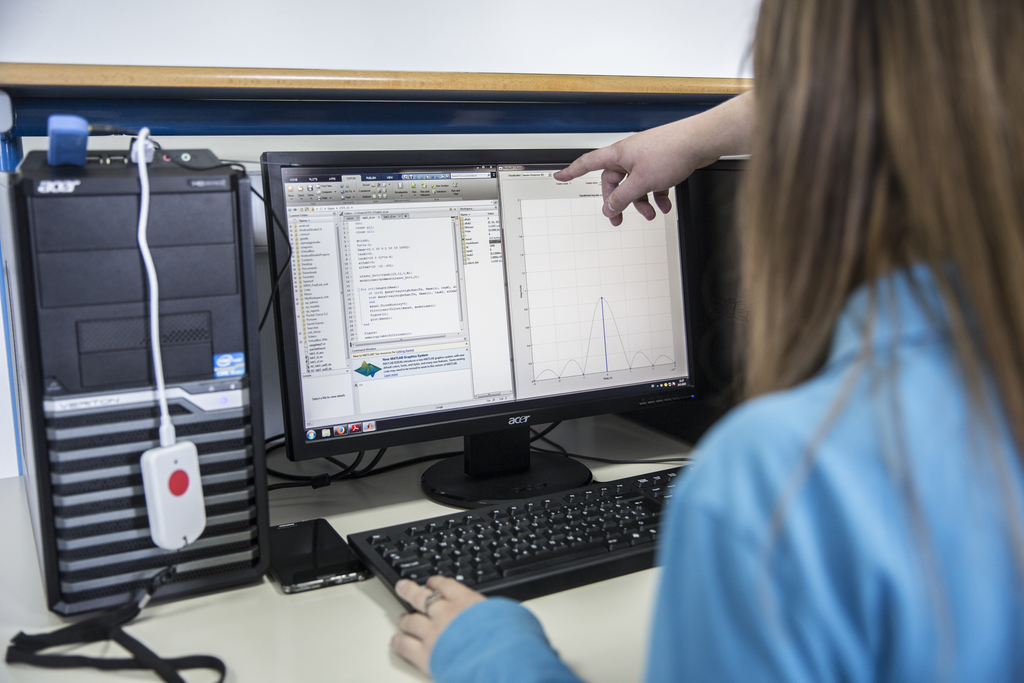

The Department of Telecommunications was founded in 1976 and is one of the four departments of the Faculty of Electrical Engineering, University of Sarajevo. Since 2005, teaching has been aligned with the Bologna Declaration and split into bachelor and master studies. The three-year bachelor programme focuses on the fundamentals of engineering practice and telecommunications. Its quality is reflected in its accreditation by ASIIN, a German member of the European Association for Quality Assurance in Higher Education (ENQA). The two-year master programme is oriented toward practical engineering work and scientific research.