Third-year students of the Department of Telecommunications visited BH Telecom as part of their coursework in the Microwave Communication Systems course.

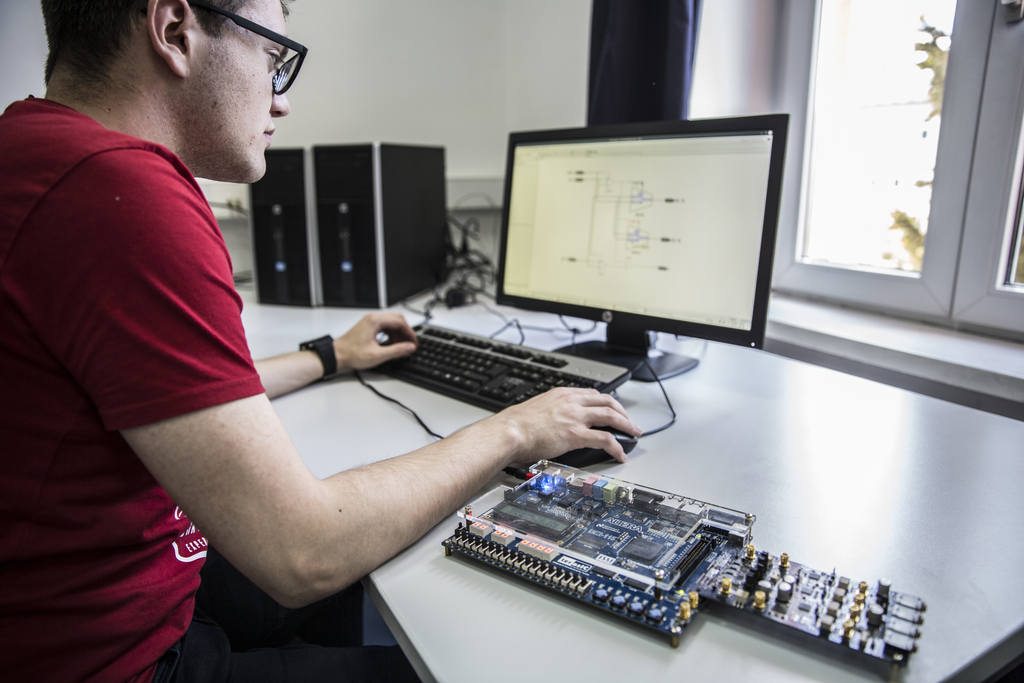

Third-year students from the Department of Telecommunications visited the MIBO company in Sarajevo on June 6, 2024, where they learned about the practical aspects of working in the telecommunications industry and the company's technical projects.

A. Maric, P. Njemcevic: Performance analysis of M‑ary RQAM and M‑ary DPSK receivers over the channels with TWDP fading

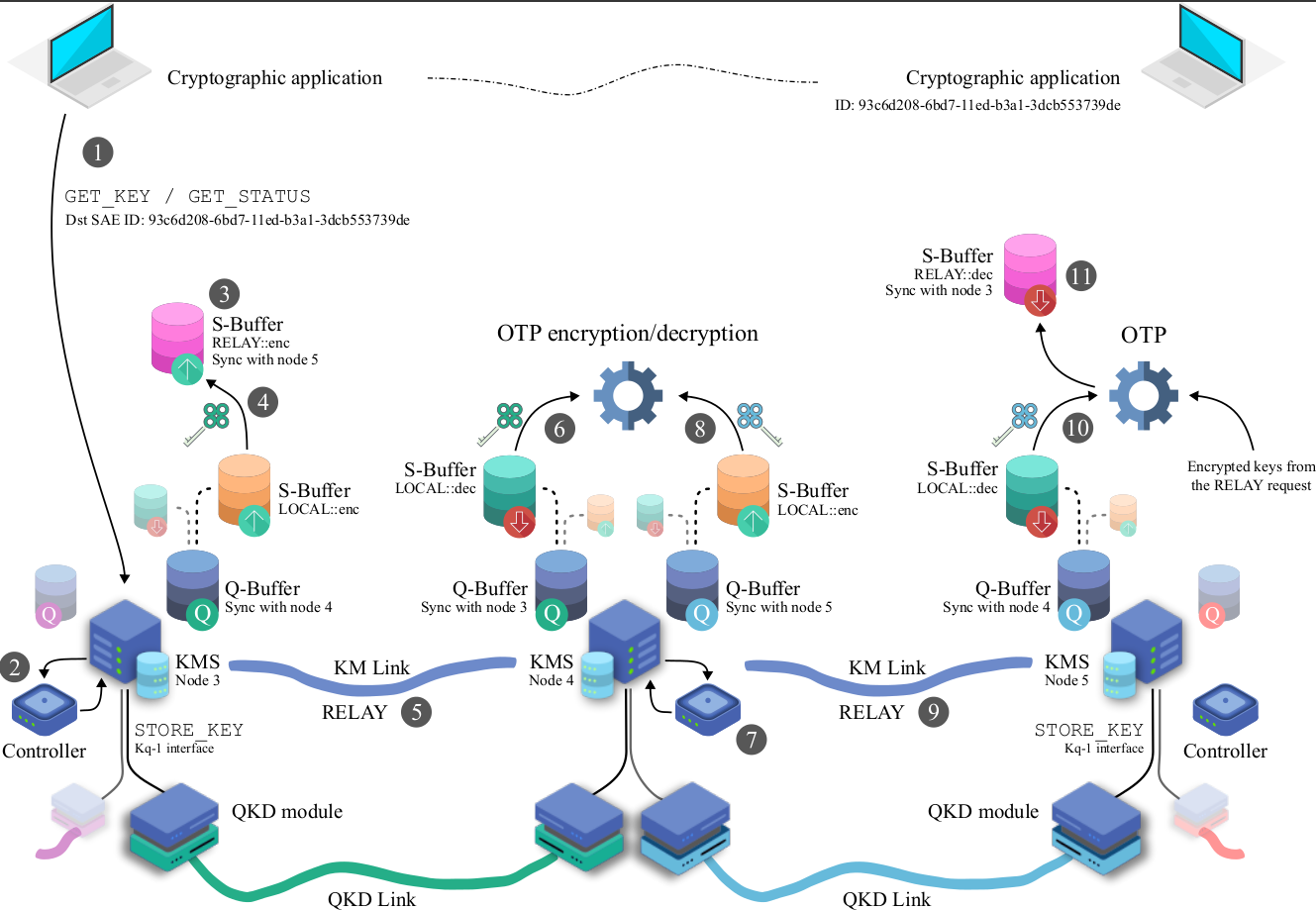

New article published in the Journal of Optical Communications and Networking

On the 28th of May, ing. Zijad Alić gave a workshop on the topic “Management of modern applications in the Kubernetes environment using current cloud platforms”. During the presentation, students had the opportunity to become familiar with advanced approaches to cloud systems.

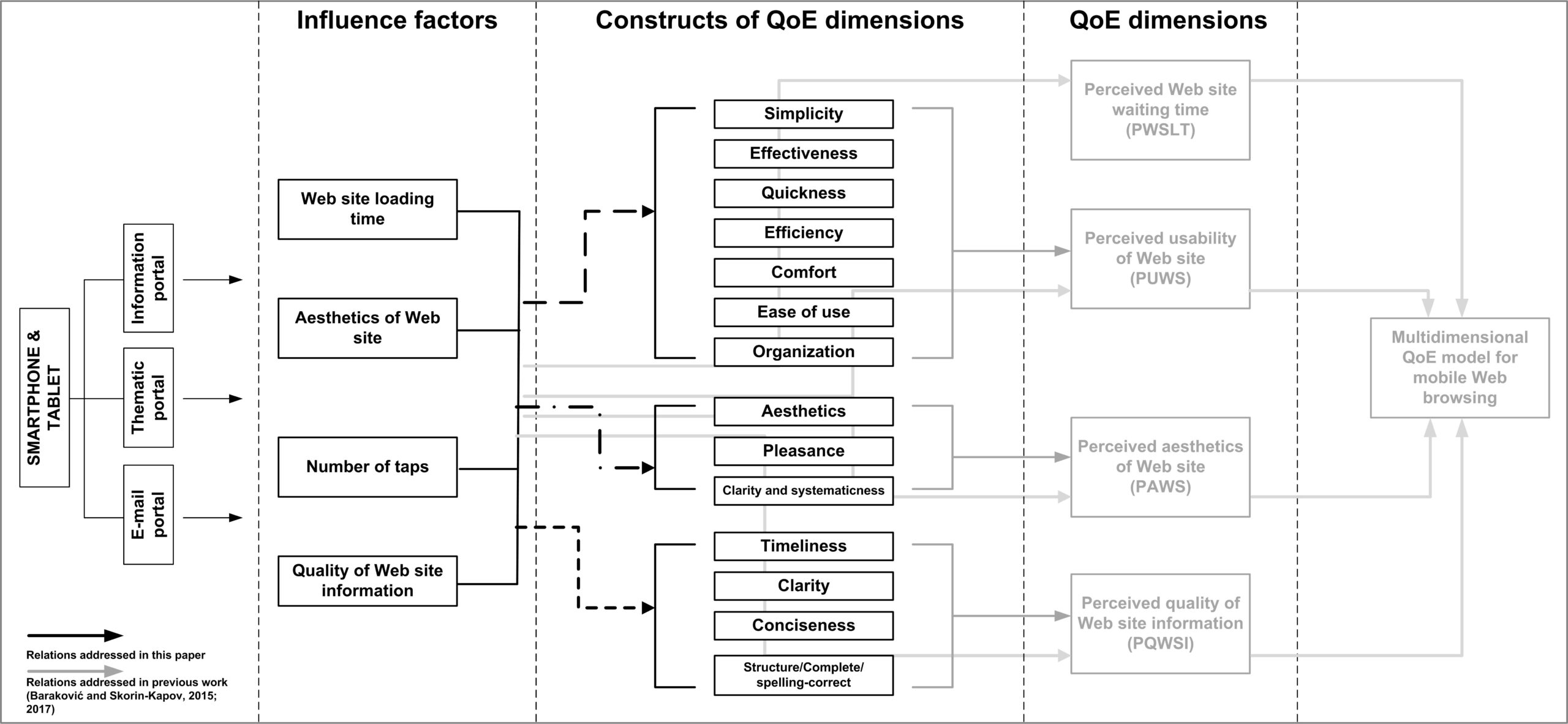

Article entitled “The Impact of QoE Factors on the Perception of Constructs Comprising Information Quality, Usability, and Aesthetics in Mobile Web Browsing” published in the Q1 journal International Journal of Human–Computer Interaction.

New SPIE workshop - Recent Trends in Silicon Photonics, Fiber Optics, and High Power Laser Technologies

News

IEEE News

- The Engineer Who Pins Down the Particles at the LHC

The Large Hadron Collider has transformed our understanding of physics since it began operating […]

- Why a Technical Master’s Degree Can Accelerate Your Engineering Career

This sponsored article is brought to you by Purdue University. Companies large and small are […]

- The Rise of Groupware

A version of this post originally appeared on Tedium, Ernie Smith’s newsletter, which hunts for […]

About Us

The Department of Telecommunications was founded in 1976 and is one of four departments of the Faculty of Electrical Engineering, University of Sarajevo. Since 2005, its study programs have been harmonized with the Bologna Declaration and are divided into bachelor's, master's, and doctoral studies.

The three-year bachelor’s study program is oriented towards the fundamentals of engineering practice and telecommunications knowledge, and it is accredited by ASIIN, the German member of the European Association for Quality Assurance in Higher Education (ENQA). The two-year master’s study program, on the other hand, is oriented towards practical engineering work and scientific research.